Four weeks of voice computing - here's what I learnt

After a recent flare-up of RSI-related wrist pain, I decided to make a serious attempt at becoming proficient at speech computing. My hope was to be able to add an alternative input method in order to offload my hands, and allow them some rest even during my daily work. I’m now four weeks in and this post summarises my impressions. The journey has had its fair share of frustrations, but also brought some surprising insights.

I remembered recently coming across a blog post where Salma Alam-Naylor described her initial foray into voice coding. Taking that as a starting point, I dove in.

Navigating a new world

Before I could even think about coding, I had to start by figuring out how to just navigate around my computer. I spent a few hours trying out Apple’s built-in Voice Control system but soon realised it was woefully inadequate. While it worked nicely in Apple’s own applications, of which I use very few, it just plain refused to engage in many others like Firefox and Slack.

But one neat feature that Apple’s Voice Control has (when it works) is the “show numbers” command, which labels each clickable element on the screen with a number that you can then speak to click that element. Fortunately there’s a third-party application called Shortcat, which enables this functionality across all apps, and sports possibly the cutest app icon ever seen among Mac apps. I promptly installed it and it quickly became an important part of my workflow.

In her blog post, Salma talked about Talon and Cursorless as being the state of the art in this space, so that was naturally where I headed next.

Talon as the foundation

Talon is a strange beast. Its modest and barebones website betrays little of its power, nor provides much in the way of instruction on its use. At first sight I almost thought the project had been abandoned, but luckily I headed over to its Slack, where I found a highly active and helpful community.

What Talon provides is the core engine for a scriptable command-based speech recognition system. The word command-based is key here. This is what sets it apart from other common dictation systems, such as Dragon or Whisper, which are mostly designed for transcribing longer passages of dictated text. Talon, on the other hand, has been tuned for working with short commands that trigger actions.

To get Talon to do anything useful, we need to supply it with scripts. Fortunately, the user community has developed a vast library of different voice commands for a large number of use cases and applications.

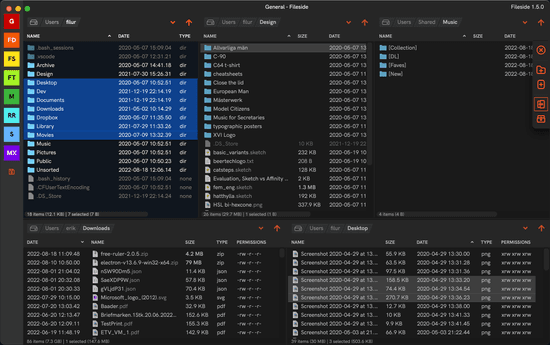

Installing and configuring this library requires some familiarity with software development tools like Git. Although Talon could be a very useful tool for a general audience too, its ecosystem does require you to be a fairly technical user, at home in a terminal tinkering around with config scripts and Python code.

On the whole, operating a Talon installation feels a bit like maintaining a Linux machine – highly configurable and versatile, but requiring ongoing effort.

Getting started with Talon

In addition to the official Talon website, there is a community wiki with helpful guides on getting started with basic commands and customising your setup. It only touches on a fraction of the capabilities that Talon together with the community set provides though.

So once I had the basics down, I went looking for more comprehensive documentation of the available commands. I eventually stumbled upon this cheatsheet PDF, which I eagerly proceeded to print out before realising it was more of an operating manual at 37 pages long than a simple cheatsheet! Anyhow, it was a hugely useful resource for getting an overall feel for all the things the community commands can do.

I can’t claim to have more than scratched the surface of these capabilities, but I’ve already discovered a lot of useful commands for driving many of the applications I use on a daily basis.

Crucial early lessons

When you first start speaking into Talon it can seem to have a mind of its own. Sometimes you say something and nothing appears on the screen at all. At other times, it starts spewing out a bunch of complete gibberish.

Turns out there are a few crucial concepts you must grasp to wrest control of this thing. Building up a good mental model is particularly important with this stuff, since you don’t have much visual feedback of what’s going on.

Commands versus dictation

By default, Talon operates in command mode. This means that it will try to match anything you say to a predefined command from the vocabulary. So if the word that’s displayed on the screen is not actually what you spoke, it’s probably because your word is not in the vocabulary and what you see just happens to be the closest matching command. It does not necessarily mean that your speech was misheard. This tripped me up in the beginning before I realised what was going on.

To have Talon transcribe exactly what you say, you have to go into dictation mode. In this mode it behaves like most other speech-to-text systems. You can also go into dictation mode temporarily by using commands like “say <phrase>” or “sentence <phrase>”, which will switch back into command mode as soon as you finish your phrase.

Speech timeout

In command mode, Talon has a default speech timeout of only 0.3s, which means that it will try to execute your command after 0.3s of silence. This can get especially hairy when using temporary dictation commands like “say”, where the slightest of pauses exits dictation mode and whatever subclause you happen to utter next gets interpreted as a command instead, potentially wreaking havoc in your editor.

Talon is extremely versatile, and lets you tweak pretty much everything. The speech timeout is no exception. I started by bumping it up to 0.5s for a slightly less stressful experience.
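
To make the effect of the timeout concrete, here is a toy model in Python. It is not how Talon is implemented: the function name split_utterances and the word timings are made up for illustration. The sketch simply cuts a stream of timed words into separate utterances wherever the silence between two words reaches the timeout.

```python
from typing import List, Tuple

# (text, start_seconds, end_seconds) -- hypothetical timings for illustration
Word = Tuple[str, float, float]


def split_utterances(words: List[Word], timeout: float) -> List[List[str]]:
    """Split timed words into utterances at every pause of at least `timeout` seconds."""
    utterances: List[List[str]] = []
    current: List[str] = []
    previous_end = None
    for text, start, end in words:
        # A long enough silence closes the current utterance.
        if previous_end is not None and start - previous_end >= timeout:
            utterances.append(current)
            current = []
        current.append(text)
        previous_end = end
    if current:
        utterances.append(current)
    return utterances


# "say hello" ... 0.4s pause ... "there friend"
spoken = [
    ("say", 0.0, 0.2),
    ("hello", 0.25, 0.6),
    ("there", 1.0, 1.3),
    ("friend", 1.35, 1.7),
]

short_timeout = split_utterances(spoken, timeout=0.3)
longer_timeout = split_utterances(spoken, timeout=0.5)
print(short_timeout)   # the pause splits the phrase in two
print(longer_timeout)  # the whole phrase stays together
```

With the 0.3s setting, the second half of the phrase ends up as its own utterance, which in command mode would be matched against commands rather than dictated. With 0.5s, the same pause is tolerated.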

Sleep

Out of the box, Talon is always listening. The phrase “go to sleep” will disable it, and “wake up” will activate it again. Forgetting to send it to sleep can cause all sorts of chaos if, for example, you start a conversation with someone else in the room or start playing a video. Since I mostly work from home, this has been manageable, but my partner has had to learn the hard way to initiate any conversation with the words “go to sleep”. If you really want to have some fun, start watching a Talon tutorial video with Talon awake. No surer way to make all hell break loose!

Mishearing woes

The first few days were undeniably full of frustration. Yet, I was surprised at how quickly the commands and the special phonetic alphabet started falling into place with repeated use. When things flowed it felt very satisfying.

By far the biggest pain point, though, was speech recognition accuracy. It seems that recognising short out-of-context words is still quite a tall order for speech recognition models. It’s easy to lose your patience when the engine repeatedly misidentifies a command you are trying to give, and inadvertently deletes a whole chunk of your document instead. Sometimes you just have to remind yourself to sit back and take a deep breath, and focus on practising patience. Blurting out obscenities with a speech engine running is unsurprisingly even more counterproductive than usual.

This led me to embark on my first side quest: improving recognition accuracy. There were two paths to explore. On the one hand, we could take the hardware route, and invest in a good headset microphone, with the aim of increasing the fidelity of the audio that gets fed to the model. On the other, we could try tweaking the vocabulary to reduce the likelihood of collisions and misidentifications.

Buying a microphone

The built-in MacBook microphone works reasonably well, although with an error rate that’s a little bit too high for comfort. Its ability to pick up other voices in the room doesn’t help either. Getting a directional microphone positioned closer to the mouth seemed like a worthwhile upgrade.

So I went looking for a light headset-mounted microphone, without a pair of unnecessary headphones coming along for the ride. This was easier said than done. Most products on the market are either headsets combining headphones with a mic, or standalone desktop mics.

Microphone-only headsets seem confined to the pro audio niche, often with XLR connectors which then need an audio interface to plug into as well. I did find a Sennheiser one with a jack plug from Thomann at around €150, which I ordered, but had to send back because the signal level was too low for a laptop.

In the end, I ordered a €60 Bluetooth headset called Avantalk Lingo on a whim, with small open ear headphones and a mic, and that’s what I’m currently using. It’s far from perfect, but it’s certainly an improvement over the laptop mic, and good enough for daily use.

I have to say though, the background noise suppression is surprisingly good on this thing. I can play music out loud with it barely even registering on the input signal from the mic.

I’m sure it’s possible to improve accuracy further by spending more, but I also suspect that it is a path of diminishing returns in terms of price/performance ratio. Some members of the Slack forum have gone all in on this route, investing in tiny €800 DPA microphones, which supposedly are the gold standard for speech recognition. Personally, I was happy to explore some other options before resorting to that.

Tweaking the vocabulary

The most fruitful path I’ve found so far has been to customise the vocabulary to reduce the likelihood of mishearings. My approach here has been to identify the spoken commands that most frequently misfire, and either change them to a different word or try to find an alternative spelling that is more reliably recognised. Alternatively, I just remove the misidentified command if it’s one that I don’t need.

A disclaimer is in order. There might well be smarter ways to achieve this with Talon that I’m not yet aware of, but this is how I’ve been handling it so far.

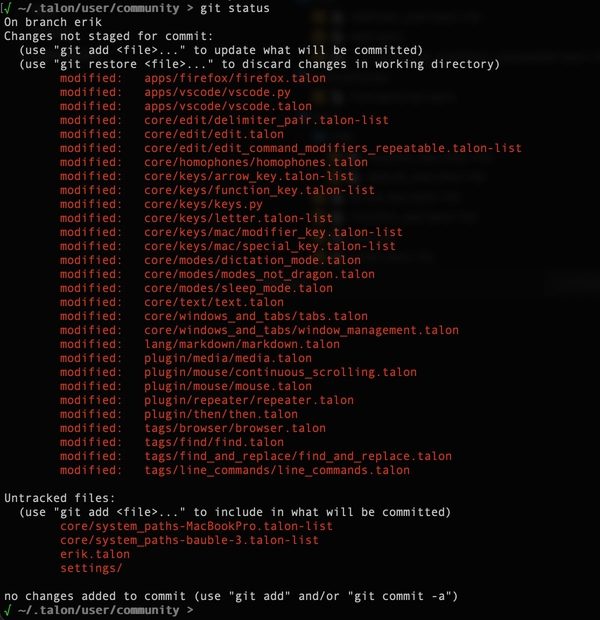

Customising Talon

Command mappings are stored in files written in a custom YAML-like format with extensions .talon or .talon-list that live under the ~/.talon/user directory. Most spoken phrases can be found somewhere in a file of one of these two types. There are some commands, however, that can only be changed inside Python code files, such as the words used for punctuation and special characters. The workflow I’ve arrived at here is to just keep the Talon community folder open in a separate VSCode window, and do global text searches in there until I find the command in question.

In .talon files we can use a pattern matching syntax to conveniently capture variants or optional words in a phrase. For example, the rule go [to] (tab | tub) <number>: doSomething() will match any utterance like “go tab 2”, “go to tub 8”, “go tub 5” etc. Note that this only works in .talon files, not in .talon-list files. Commands can be disabled by prefixing them with a #, analogous to a Python comment.
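
To show how optional words and alternatives expand, here is a rough Python sketch that translates a tiny subset of this rule syntax into a regular expression. It is only an illustration and not how Talon parses rules: rule_to_regex and matches are hypothetical helpers, and the <number> capture is simplified to plain digits.

```python
import re


def rule_to_regex(rule: str) -> "re.Pattern[str]":
    """Translate a small subset of the rule syntax into a compiled regex.

    Supported here: literal words, [optional] groups, (a | b) alternatives
    and <number>, which this sketch treats as a run of digits.
    """
    parts = []
    for token in re.findall(r"[\[\]()|]|<\w+>|\w+", rule):
        if token in ("[", "("):
            parts.append("(?:")
        elif token == "]":
            parts.append(")?")   # square brackets make the group optional
        elif token == ")":
            parts.append(")")
        elif token == "|":
            parts.append("|")
        elif token == "<number>":
            parts.append(r"(?P<number>\d+)\s+")
        else:
            parts.append(re.escape(token) + r"\s+")
    return re.compile("^" + "".join(parts) + "$")


def matches(rule: str, utterance: str) -> bool:
    """Check whether an utterance fits the rule (a trailing space closes the last word)."""
    return rule_to_regex(rule).match(utterance.strip() + " ") is not None


rule = "go [to] (tab | tub) <number>"
for phrase in ["go tab 2", "go to tub 8", "go tub 5", "go top 5"]:
    print(phrase, matches(rule, phrase))
```

The first three phrases fit the rule, while “go top 5” does not, because “top” is not among the listed alternatives.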

A gotcha to be aware of here is that some Talon actions are internally just emitting keyboard shortcuts, so if you have modified the default shortcuts in any of the applications you use, this might be the reason why a particular command fails.

Pronunciation matters

It became apparent pretty quickly that some words were more problematic than others. As a Swede who spent many years in the UK, I have an English accent that lies closer to the British pronunciation (BE) than the American one (AE), and while the speech engine in Talon in theory is capable of capturing all accents, in practice it does tend to favour AE in ambiguous cases.

Here’s a recording of me saying the words “harp” and “tab” each four times in my usual accent, and then four times in my best attempt at putting on an American accent.

The alphabet

To input individual characters, the Talon community set uses a custom alphabet of easily distinguishable monosyllabic words. Some of the default mappings in the alphabet rely on AE pronunciation, especially ones including an “r” in a position where it’s usually silent in BE.

So one of the first things I took the scalpel to was the alphabet. Not all changes are related to recognition accuracy; some just sat better with me. In general, it seems like words including sharp consonants like “t”, “s” and “k” have a higher chance of success than those with softer ones like “b” or “p”.

| Letter | Default | Modified | Rationale |
|---|---|---|---|
| A | air | act | Not recognised without pronouncing the “r”. |
| B | bat | bad | Reduce collisions with “dot”. |
| C | cap | cat | Just so we can have a cat. |
| D | drum | drum | |
| E | each | elk | Increase animal quota further. |
| F | fine | face | To free up “find” for use as a command. |
| G | gust | gust | |
| H | harp | hill | The “r” thing again. |
| I | sit | ink | My brain balked at using a word starting with “s” for “i”. |
| J | jury | job | Two syllables? We can do better. |
| K | crunch | kid | Again, “c” for “k”? |
| L | look | look | |
| M | made | made | |
| N | near | nip | “r” thing. |
| O | odd | odd | |
| P | pit | pit | |
| Q | quench | quench | |
| R | red | red | |
| S | sun | sun | |
| T | trap | trap | |
| U | urge | use | Hard to pronounce, plus “r”. |
| V | vest | void | No idea why, just like “void” better. |
| W | whale | whale | |
| X | plex | plex | Words starting with “x” are rare, so stuck with it. |
| Y | yank | yank | |
| Z | zip | zip | |
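
As a small illustration of how the alphabet is used, the Python sketch below holds the modified column of the table as a plain dictionary and spells out a word letter by letter. This is not how the community set stores the alphabet. MODIFIED_ALPHABET and spell are hypothetical names used only for this example.

```python
from typing import Dict, List

# The "Modified" column of the table above, keyed by letter.
MODIFIED_ALPHABET: Dict[str, str] = {
    "a": "act", "b": "bad", "c": "cat", "d": "drum", "e": "elk",
    "f": "face", "g": "gust", "h": "hill", "i": "ink", "j": "job",
    "k": "kid", "l": "look", "m": "made", "n": "nip", "o": "odd",
    "p": "pit", "q": "quench", "r": "red", "s": "sun", "t": "trap",
    "u": "use", "v": "void", "w": "whale", "x": "plex", "y": "yank",
    "z": "zip",
}


def spell(word: str) -> List[str]:
    """Return the spoken words needed to type `word` one letter at a time."""
    spoken = []
    for letter in word.lower():
        if letter not in MODIFIED_ALPHABET:
            raise ValueError(f"no spoken form for {letter!r}")
        spoken.append(MODIFIED_ALPHABET[letter])
    return spoken


print(" ".join(spell("cat")))  # cat act trap
```

A quick sanity check worth running after every change is that no two letters share a spoken word, for example by comparing len(set(MODIFIED_ALPHABET.values())) against 26.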

The keyboard

Other frequently used commands relate to special keys on the keyboard. Here I’ve also ended up with considerable customisation after four weeks of use.

| Key | Default | Modified | Rationale |
|---|---|---|---|
| Enter | enter | slap | Constant misidentification |
| Control | control | troll | For brevity |
| Option | option | (chen \| chun \| chon) | For brevity |
| Command | command | man | For brevity |
| Tab | tab | took | Constant misidentification |
| Backspace | delete | back | Personal preference |
| Delete | forward delete | pinch | Personal preference |
| Up arrow | up | sup | Frequent misidentification |
| Down arrow | down | don | Frequent misidentification |
| Home | home | homey | Frequent misidentification |
| End | end | endy | Frequent misidentification |
| Colon | colon | cold cut | Constant misidentification |
| Semicolon | semicolon | semi | For brevity |

Other commands

Most of the other command modifications I’ve made are more subjective. The word “tab” deserves a special mention though. I found that it was very often misidentified when I spoke it, so I decided to separate it into two distinct commands: “took” for the Tab key, and a compound command of variants “(tab | tub | top)” for a browser tab. That combo seems to work reasonably well for capturing my pronunciation of the word “tab”.

I had similar issues with the words “down” and “up” at times, so have replaced them with “don” and “sup” in all contexts where they occur. One cool thing is that you don’t need to restrict your commands to words that actually exist, but can make up new ones as long as Talon can guess at the pronunciation from their spelling.

An iterative process

All this tweaking is still very much a work in progress and mappings change on a daily basis. I always keep a notepad by my side as I go along, where I note down misidentifications and issues as they occur. Then every so often I take some time out to go over my list, and see how things can be improved by tweaking the community set. Rinse & repeat.

At this point I have a sprawling changeset spread over many different files. I have yet to find a way of making this more manageable. (One idea I’d like to explore is to write a script to automatically add a tag: never header to all the default .talon files, and then redefine only the commands that I actually use in a single override file.)

As for learning, this stuff seems niche enough that our marvellous new LLM companions aren’t always that helpful. This makes the Talon Slack community full of friendly humans an invaluable resource.

Tackling actual coding

For the first week I stuck to Talon only. But trying to edit code was pretty excruciating, even with the new skills I had started to hone. It was time for the second side quest.

Enter Cursorless. It’s described as “a spoken language for rapid, structurally aware voice coding”. What it really is though, is an ingeniously brilliant piece of orthogonal thinking, invented by one Mr Pokey Rule.

For a good introduction, I highly recommend watching Pokey’s engaging Strange Loop talk on Cursorless.

Levelling up with Cursorless

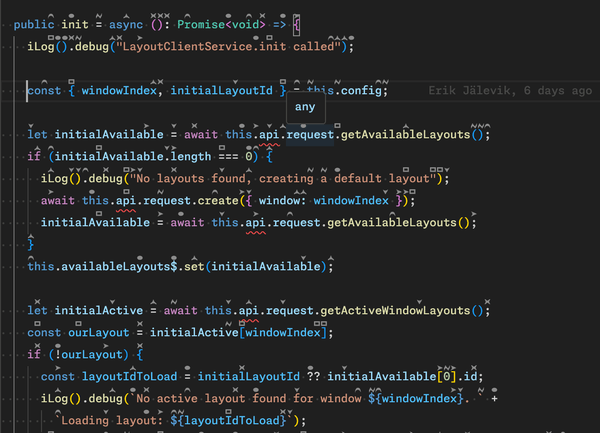

Briefly put, Cursorless works by building an abstract syntax tree of your file, which it then uses to add decorations, known as hats, above the words and symbols (tokens) of your text. These hats in conjunction with the Talon alphabet you’ve by now hopefully internalised, combined with modifiers and actions, will allow you to dice and slice your source text with remarkable precision and conciseness using only your voice.

As an example, you might say “chuck funk blue whale” to delete a function within which a token with a blue hat over the character “w” (“whale” in the Talon alphabet) can be found. It takes some getting used to, but starts to feel quite natural with a bit of practice.

The structure-specific aspects of the language, which allow you to target specific nodes in a syntax tree (e.g. “funk” above), are only available for a set number of programming languages. But Cursorless is useful even without these aspects, you just have slightly fewer structural modifiers to work with. I am, for example, editing this blog post using Cursorless commands. One limitation is that Cursorless is currently only available as a plugin for VSCode and its forks, so you need to be editing your text in one of those programs.

The concept as such need not necessarily be restricted to voice control, as from a more general perspective, it’s really a system for hacking away directly at an AST rather than on its textual representation at the granularity of individual characters. This is a powerful idea, which the Cursorless team seems to have realised as they are actually working on a keyboard-based version.

Learning yet another language

So now I have to learn another language just to code in my usual language, you may object. Yes, I’m afraid you do.

But it’s a much easier language to learn than a traditional programming language. The main effort involved in learning it is to grasp a set of concepts, like targets, modifiers and actions, and memorise their names. Fortunately, these concepts map quite intuitively to the grammatical structure of spoken English. It’s clear that much thought has gone into designing a consistent and coherent grammar, giving rise to an excellent user experience.

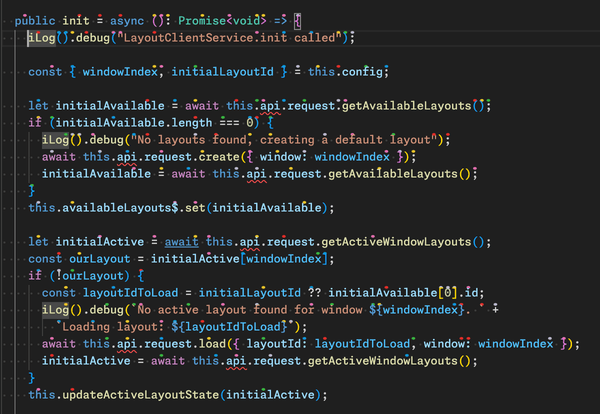

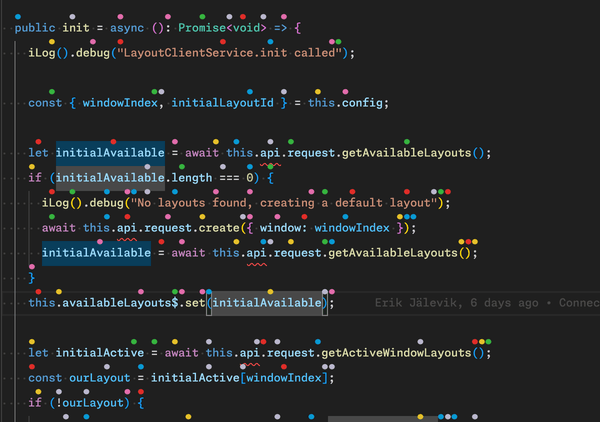

Visual distraction

One of the hardest things to get used to was all the visual noise added by the colourful dancing hats on top of my code. Especially together with syntax colouring plus all the different squiggles and marks coming from Intellisense, Typescript, the linter etc. It didn’t help that I had to increase the size of the hats and the line height considerably to be able to reliably distinguish the different colours from each other when sitting at a comfortable distance from the screen.

Fortunately, just like with Talon, everything can be customised. My first angle of attack involved tweaking the colours to make them a little less saturated, as well as changing the red to brown, to make actual compiler errors easier to spot. Which helped somewhat, but didn’t do much in the way of combatting the visual noise.

In addition to coloured blobs, we can also enable a number of different hat shapes. My initial experiments with this did not really improve things much, until I decided to try eliminating colours altogether, letting all hats render in a medium greytone (hsl(0, 0%, 55%)), while at the same time enabling as many different hat shapes as I could realistically distinguish from each other.

And this really hit the sweet spot in terms of finding a balance between visual distraction and precision. Because the thing is, without all the different colours, the algorithm has fewer overall hats to distribute across the tokens, so you get fewer tokens “hatted” than before. But it seemed a tradeoff worth making. In addition, I was able to reduce the hat size and line height settings considerably, as I discovered that I find it easier to distinguish between different shapes than between different colours at small scales. The eye often needs a bit of surface area to perceive a colour accurately.
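
The tradeoff can be illustrated with a toy allocator in Python. This is not Cursorless’s actual algorithm, just a greedy sketch under simple assumptions: every hat is a unique (style, character) pair, a style stands for any distinguishable colour or shape, and allocate_hats and count_hatted are hypothetical names.

```python
from typing import List, Optional, Set, Tuple

Hat = Tuple[str, str]  # (style, character), e.g. ("fox", "w")


def first_free_hat(token: str, styles: List[str], used: Set[Hat]) -> Optional[Hat]:
    """Return the first unused (style, character) pair for this token, if any."""
    for style in styles:
        for character in token.lower():
            if (style, character) not in used:
                return (style, character)
    return None


def allocate_hats(tokens: List[str], styles: List[str]) -> List[Optional[Hat]]:
    """Greedily give each token a unique hat; None means the token stays bare."""
    used: Set[Hat] = set()
    hats: List[Optional[Hat]] = []
    for token in tokens:
        hat = first_free_hat(token, styles, used)
        if hat is not None:
            used.add(hat)
        hats.append(hat)
    return hats


def count_hatted(tokens: List[str], styles: List[str]) -> int:
    """Count how many tokens received a hat."""
    return sum(hat is not None for hat in allocate_hats(tokens, styles))


tokens = "x = x + x * x".split()
for styles in (["default"], ["default", "fox"], ["default", "fox", "wing", "frame"]):
    print(len(styles), "style(s):", count_hatted(tokens, styles), "of", len(tokens), "tokens hatted")
```

Each additional style lets one more of the repeated “x” tokens receive a hat, which is the same effect in miniature as trading colours for shapes.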

Of course being the tinkerer I am, I couldn’t stop myself from renaming some of the hat shape names as well. Here’s the current set of hats I’m working with.

| Hat shape | Default | Modified | Rationale |
|---|---|---|---|
| | The default shape has no name, you just speak its letter. | | |
| | bolt | flash | Better accuracy, and “flash” was the first word that came to mind when seeing this shape. |
| | curve | roof | The “r” thing again, and “roof” just felt natural. |
| | fox | fox | |
| | frame | frame | |
| | play | beak | Wanted to avoid confusion with the media play command, and “beak” fits the shape well. |
| | wing | wing | |
| | ex | cross | In my Talon config I have “ex” as a synonym for “times”, so wanted to avoid ambiguity. |

The remaining hat shapes often look a bit too similar to one of the above at smaller sizes, so I’ve left them out.

Vocabulary

The default vocabulary used in Cursorless is ontologically elegant, but some metaphors didn’t really click for me. In particular, I found words like “drink”, “pour” and “float”, used for actions that insert lines in various ways, difficult to memorise. So here I settled on “wedge” to insert a line above, and “bleed” to insert a line below a target.

I was also constantly mixing up the words “line” and “row”, which refer to the same thing in different contexts, row being a special kind of target used with a row number, and line being a modifier. I found that just renaming “row” to “line” did not introduce any ambiguities in the grammar, and drastically reduced mental load.

And “past” had to become “beyond”, to prevent frequent misidentifications as “post”.

Another source of confusion, at least for me, is areas where both Talon’s community set and Cursorless offer similar commands. They both provide commands for selecting, copying, pasting, line editing etc, but with slightly different semantics. I suspect that the sensible thing to do here would be to use only one or the other. However, going all in on memorising only the Cursorless versions of these commands could be problematic as you will still need the Talon versions in contexts outside of VSCode.

By the way, vocabulary customisation is another area where the UX of Cursorless shines. All configurable commands have been exposed as a separate set of external CSV files, where you can modify, override or disable commands without having to do surgery on the internals of a Git repo. And the cheatsheet invokable by “cursorless cheatsheet” even updates dynamically to include your own tweaks.

Unexpected joys

Even though this exploration was forced upon me through debilitation, it’s also been a source of some unexpected delights that I would never have encountered had I stayed in the local maximum that is mouse and keyboard control.

One is the realisation of what an improvement natural language is over cryptic keyboard shortcuts as a source set for mapping human input to machine action. Remembering keyboard shortcuts is hard because they are so arbitrary. Remembering words and phrases, on the other hand, is what our brains evolved to do. The potential power of opening this space up to the whole of human language has been a bit of a revelation.

Another is the precise method of editing that Cursorless affords by pruning an abstract syntax tree instead of editing a linear sequence of characters. This seems powerful enough that I think I would continue to use it even if my RSI went away.

A large class of typos also gets eliminated. Once a speech engine correctly identifies a word, it’s never going to misspell it. Typos will instead generally consist of a completely different word, which will be easier to spot while editing.

Last but not least, there’s a certain childlike glee to be had from finding oneself uttering unexpected phrases like “man slap”, “puff beak face” or “chuck paint fox trap”.

Lingering sorrows

While I feel like I’ve come quite a long way already, there are still many areas where I’m totally lost without a mouse or a keyboard. Voice control still makes for a poor replacement for mouse-heavy workflows, like image, audio or video editing. And even such an apparently simple task as scrolling provides its own challenges without a mouse or touchpad. Anything that requires precise mouse movements is generally extremely cumbersome.

I’m lucky enough to still have a pair of somewhat usable hands to fall back on when needed. For those needing to go fully hands-free, there are further avenues to explore, such as using a special eye-tracking device to control the mouse pointer, or incorporating noises like hissing, clicking and popping sounds. All this is also supported by Talon.

The verdict

Overall, I’m pleasantly surprised at how usable voice control is today, and how proficient I was able to get in four weeks. Of course I can’t yet claim to be working at the same speed as with keyboard and mouse, but at least the speed reduction is not so drastic as to cause me to feel completely unproductive.

When it comes to coding specifically, another thing that really helps is how capable AI coding agents like Cursor have recently become, since they further reduce how much actual typing you need to do in the first place. (Contrary to how it might seem, Cursor and Cursorless will not cancel each other out to zero.)

The biggest pain point remains misidentified commands. The main route to working faster with voice is to batch up a sequence of commands into one utterance. Only trouble is that the longer you make a string of commands, the higher the risk that a misheard one slips through and messes up your editor.
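
A back-of-the-envelope calculation shows how quickly this risk grows. The Python sketch below assumes each command is recognised independently with the same accuracy, which is a simplification, and the 95% figure is an illustrative number rather than a measurement of Talon.

```python
def chain_success_probability(per_command_accuracy: float, chain_length: int) -> float:
    """Probability that every command in a chain is recognised correctly.

    Assumes independent errors with identical accuracy for each command.
    """
    if not 0.0 <= per_command_accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    if chain_length < 0:
        raise ValueError("chain length must not be negative")
    return per_command_accuracy ** chain_length


# Illustrative accuracy only; real accuracy varies by word, microphone and speaker.
for length in (1, 3, 5, 10):
    probability = chain_success_probability(0.95, length)
    print(f"{length:2d} commands: {probability:.1%} chance of a clean run")
```

Under these assumptions, a five-command utterance at 95% per-command accuracy comes through cleanly only about three times out of four, which matches the feeling that long chains are a gamble.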

Muscle memory is also a hard foe to beat. There’s no doubt that switching to an entirely new input method requires a lot more cognitive effort, or system 2 activation to use Daniel Kahneman’s term, compared to the deeply ingrained habits of keyboard and mouse use. Becoming successful requires discipline and patience, resisting the urge to just grab the mouse and instead taking the time to figure out the most appropriate voice command for the job.

This exploration also powerfully drove home the point about how diversity and disability can be fuel for innovation. Cursorless would never have seen the light of day if it hadn’t been for a physical disability. DEI vs MAGA 1-0.